There's no word on when these new features will come to Mac and iOS devices, but we're hoping to hear more at WWDC 2022.

Image: Apple

Apple’s annual WWDC developer conference is fast approaching, so get ready for tons of leaks and reveals in anticipation of what’s to come from Cupertino. This latest preview comes directly from Apple, in a tidy press release detailing the company’s new accessibility features, coming soon to iOS.

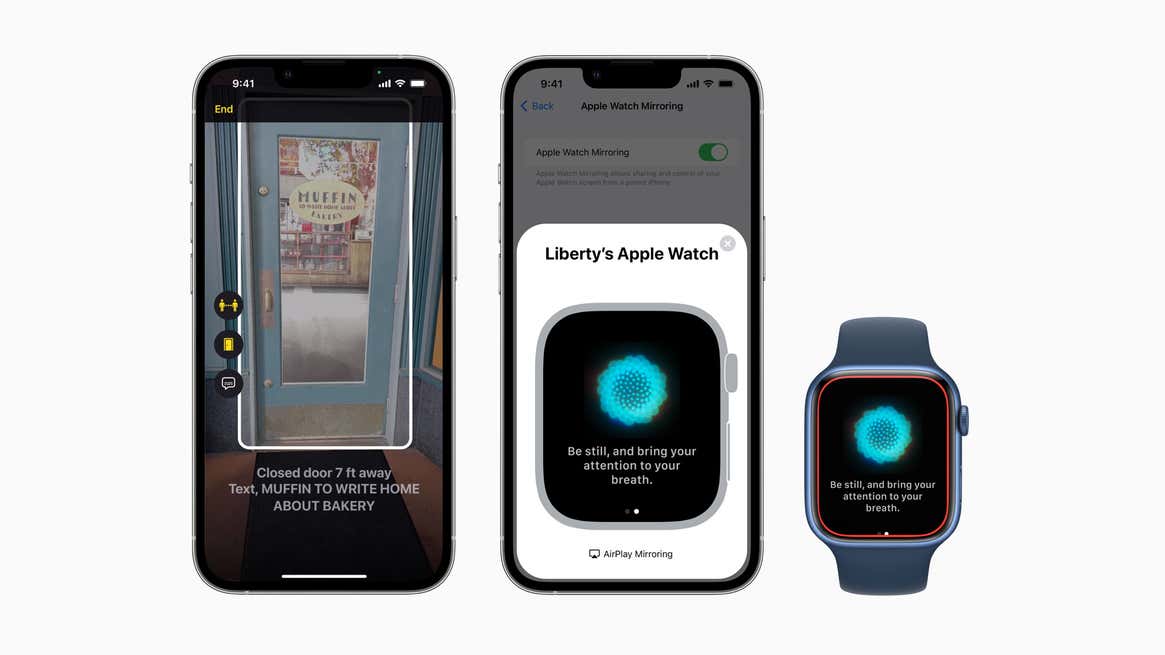

The first of the significant new features is Door Detection, which sounds like it’ll do what it advertises. Users who are blind or have low vision will be able to use the built-in Magnifier app to gauge how far away a door is and whether it’s open or closed. The app will also be able to scan the door for the business name and opening hours. The feature will only be available on iPhone and iPad devices with a built-in LiDAR Scanner, including the iPhone 13 Pro and iPhone 12 Pro series and the iPad Pro 11-inch.

Next up, Apple will add Apple Watch Mirroring in future versions of iOS. The feature will let people view and interact with their Apple Watch screen through a paired iPhone. The idea is to help those who find the Apple Watch’s under-two-inch display too small to interact with. The only caveat is that you may have to upgrade your Apple Watch, as the feature will be available on Series 6 and newer models only.

One of the more compelling accessibility features coming soon is offline live captions on iPhones, iPads, and Macs. You’ll see a live read-out of spoken words in FaceTime calls, video chats, and social media apps. The transcription happens entirely on-device, rather than hitting the cloud and bouncing back to you. Apple also plans to attribute the transcribed dialogue to individual speakers in apps like FaceTime, so that you’re aware of who said what.

Apple’s Live Captions are not unlike Google’s Live Caption feature, which is available on Android smartphones, tablets, and Chromebooks. But like the Apple Watch Mirroring feature, Live Captions will be limited to newer devices, including the iPhone 11 or newer, iPads with the A12 Bionic chip or later, and M1-powered Macs. That’s because the custom chips in those models are what make this sort of offline transcription possible.

Small, helpful features

A screenshot showing the new book themes

Apple’s new Books themes will make it easier to read on your phone. Image: Apple

Apple will also roll out a few accessibility-minded features that are subtler than the new Door Detection feature and offline captions.

There will be new themes for Apple Books users that let you choose between more background colors and spacing options. This should be helpful to folks with vision impairments and even to folks like myself who suffer from chronic headaches as a result of an overly bright screen.

Apple’s VoiceOver screen reader, which functions like Android’s TalkBack, announces what each user interface element is, including buttons and menu items. It will gain support for an additional 20 languages and locales. Apple currently supports 30 languages for VoiceOver on the iPhone and iPad, so the additions will make it available to more users around the globe.
